The Empathy Algorithm Grid: How Builders and Poets Will Shape the Future of AI

Designing AI that doesn’t just work — but feels right.

Most companies scale logic. Very few scale love.

And many end up in the worst place of all — systems that are fast, but unloved.

They’ve built something impressive that no one wants to talk to.

They deploy bots that resolve, but don't reassure. Systems that work — but feel wrong.

And in those moments that matter — a blocked card, a missed refund, a scary medical claim — customers don’t just want fast. They want felt.

If you’re in customer experience, this is your job now: not just designing conversations — but designing emotional infrastructure at scale.

You're not building one system. You're influencing how tens of thousands — sometimes millions — of people feel in their most vulnerable moments.

That’s why we need a new way to think about AI Assistants. Not as tools. But as touchpoints of trust.

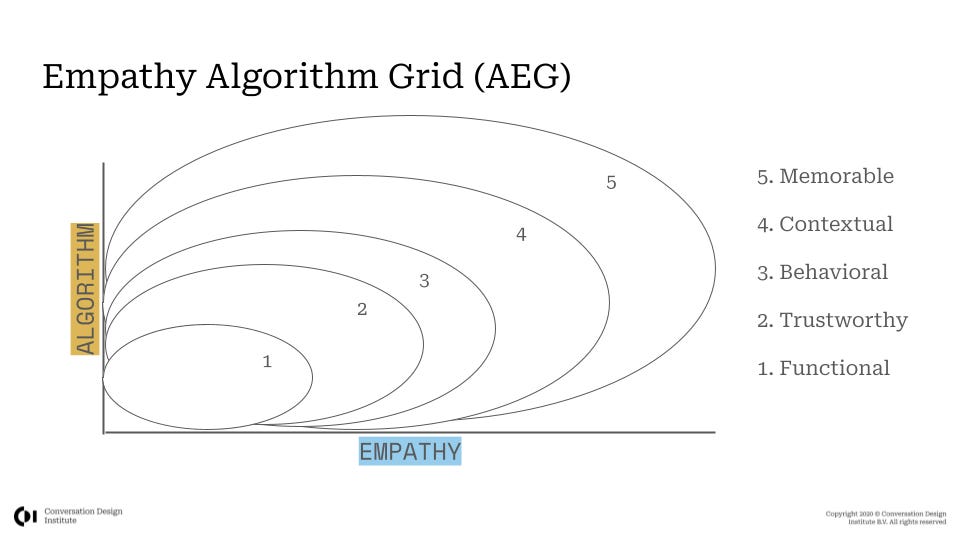

This is where the Empathy Algorithm Grid (EAG) comes in.

Growing Up in Design

When I entered the field of conversation design, contact centers seemed to run on a deceptively simple truth: success lies in balancing three forces (automation, customer experience, and cost to serve).

Obvious in theory. Rare in practice.

Again and again, I watched companies over-invest in efficiency — chasing seconds off calls, optimizing routing, pushing containment — only to erode trust, drive up escalations, and bleed cost back into the system.

Others fell in love with delightful experiences, but failed to scale them. Their best work became artifacts — admired, but abandoned.

Everyone’s buying shovels. Few know how to dig.

Technology scales fast. Emotional design doesn’t — unless you build for it.

Real design matures holonically.

Each layer includes and transcends the last — a concept introduced by Arthur Koestler, the Hungarian-British author and polymath, who coined the term holon to describe entities that are simultaneously wholes and parts.

This idea was later expanded by Ken Wilber, the American philosopher behind Integral Theory, who argued that development in people, systems, and consciousness follows a nested pattern of growth — where new layers build on, rather than replace, the old.

Great Assistants evolve the same way.

First, bots answered questions. Then they solved problems. Now, the best don’t just respond — they relate. They don’t just work. They feel right.

And if we were to show these levels of development holonically, it would look like this.

That kind of experience doesn’t emerge from intent-matching. It emerges from intentional culture.

Builders and Poets

Plato, in his vision of an ideal society, described two essential forces: the builders, who shape structure and order — and the poets, who bring meaning, soul, and spirit.

That dynamic still holds.

In Conversational AI, the builders are the engineers and architects — the ones scaling logic, automation, and flow. The poets are the designers, writers, and empaths — the ones who make it sound human, feel human, be human.

One without the other creates collapse.

Most organizations are builder-heavy. They track containment, average handle time, and automation rate. Useful metrics — but they don’t buy loyalty.

Containment doesn’t equal connection. Speed doesn’t scale trust.

People remember how you made them feel, not how fast you made them feel it.

The Assistants of tomorrow won’t win by being faster. They’ll win by being felt.

And just like in any true holon — where each layer is both a whole and a part — you need both builders and poets to shape each level. Structure without soul collapses. Soul without structure drifts. Only when they work together does the system become something greater than the sum of its parts.

The Reflection Principle

Your Assistant reflects your culture.

It tells your customers what you actually care about.

In builder-led orgs, Assistants are efficient but cold. They scale logic, but not love. This lack of love will likely stand in the way of unlocking more complex journeys that require longer conversations.

In human-led orgs, the Assistant feels thoughtful, calm, and caring — like someone was thinking about the person behind the problem.

Every Assistant whispers the values of the team that built it.

You don’t just build AI. You broadcast your DNA.

The Empathy Algorithm Grid

This is why I created the Empathy Algorithm Grid (EAG).

The Grid maps two forces:

X-axis: Empathy — from transactional to relational

Y-axis: Algorithm — from low automation to high automation

Each quadrant tells a different story:

Bottom Left – Discovering: You’re just getting started. A few basic flows. Maybe a FAQ bot. Low empathy, low automation. You’re learning — but still invisible.

Top Left – Squandering: You’ve invested heavily in automation — but forgot the human. Customers avoid your Assistant. It’s fast, but soulless. You’ve scaled something nobody wants to talk to.

Bottom Right – Wandering: You’ve created warm, novel experiences — but there’s no structure. No business case. Delight without discipline. Poets without builders.

Top Right – Leading: This is where the magic happens. Builders and poets working together. Automation meets empathy. Systems that scale and resonate. When these forces are aligned, cost to serve goes down — not because you’re cutting corners, but because you’re earning trust.
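The grid is described qualitatively here, with no scoring method attached. As a purely illustrative sketch, assume a team scores its Assistant from 0 to 1 on each axis and treats 0.5 as the line between "low" and "high". The scale, the threshold, and the names `AssistantScores` and `eag_quadrant` are hypothetical and not part of the EAG as described. Under those assumptions, the four quadrants reduce to a simple lookup:

```python
from dataclasses import dataclass

# Hypothetical cut-off between "low" and "high" on an assumed 0-1 scale.
# The EAG itself does not define one; a team would need to calibrate it.
THRESHOLD = 0.5


@dataclass
class AssistantScores:
    empathy: float     # X-axis: 0.0 = transactional, 1.0 = relational (assumed scale)
    automation: float  # Y-axis: 0.0 = low automation, 1.0 = high automation (assumed scale)


def eag_quadrant(scores: AssistantScores, threshold: float = THRESHOLD) -> str:
    """Map two hypothetical axis scores onto the four grid quadrants."""
    for value in (scores.empathy, scores.automation):
        if not 0.0 <= value <= 1.0:
            raise ValueError("scores are assumed to lie between 0 and 1")

    high_empathy = scores.empathy >= threshold
    high_automation = scores.automation >= threshold

    if high_automation and high_empathy:
        return "Leading"      # top right: automation meets empathy
    if high_automation:
        return "Squandering"  # top left: fast, but soulless
    if high_empathy:
        return "Wandering"    # bottom right: delight without discipline
    return "Discovering"      # bottom left: just getting started


if __name__ == "__main__":
    # Made-up scores, loosely echoing the quadrant descriptions above.
    examples = {
        "basic FAQ bot": AssistantScores(empathy=0.2, automation=0.1),
        "speed-first bot": AssistantScores(empathy=0.2, automation=0.9),
        "charming but shallow bot": AssistantScores(empathy=0.8, automation=0.2),
        "balanced Assistant": AssistantScores(empathy=0.8, automation=0.8),
    }
    for name, s in examples.items():
        print(f"{name}: {eag_quadrant(s)}")
```

The lookup is the easy part. The real work is producing honest scores for each axis, which is where builders and poets have to agree on what they are measuring.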

The craft is in the pacing: evolving empathy and algorithm side by side. That’s how you get results that last.

That’s not a destination. It’s a path.

You may have seen me reference a more detailed version of this grid in keynotes or CDI courses, one that uses “Automation Rate” and “Customer Experience” as the axes. That version is designed for applied use inside teams, where measurement and diagnostics matter. It’s one of the tools we use in maturity assessments.

This version — the Empathy Algorithm Grid — is a simpler, more symbolic framing meant to reach a wider audience. It’s punchier, but the underlying principles are the same. And in future pieces, I’ll continue to use both: the practical for application, and the poetic for perspective.

Most orgs zigzag through the grid — from Discovering to Wandering, sometimes Squandering hard-won gains.

The path to Leading is real, but it’s not linear.

It takes pacing. Alignment. And a shared vision between builders and poets.

The EAG helps you name where you are — and see where you’re going.

Because you can’t scale what you haven’t mapped.

Whether you’re using a platform like Rasa, Kore.ai, Cognigy, Quiq, Yellow.ai, or Teneo, or exploring newer entrants like Parlant (check them out!), the principles still apply.

What matters most is designing with intention: balancing automation with empathy, and building systems that scale without losing their soul. Otherwise, you might end up losing your customers.

Two Brands, Two Paths

Let’s make this real.

Streamtel, a telecom giant, optimized everything. They trimmed 20% off handle time. Calls got shorter. So did patience. Customers bailed. Containment was up. Trust was down. Escalations exploded.

Maison Aurore, a luxury fashion brand, took the opposite route. They built a witty, charming Assistant. People smiled — until they needed something functional. It couldn’t support real transactions. Delight gave way to disappointment.

Streamtel ended up in Squandering — all speed, no soul.

Maison Aurore drifted into Wandering — high empathy, low structure.

Different paths, same result: low impact and rising costs.

You don’t scale or relate. You scale by relating.

At CDI, we worked with a global brand that had strong automation potential but weak emotional design. Within six months of introducing reflective phrases and trust-building language, escalations dropped by 30%.

That’s not just a stat. That’s a shift in human experience.

In other words, for every 10 people who would otherwise have had to wait, explain, and fight to get what they needed, three simply got it handled. No stress. No escalation. No friction.

Small emotional investments. Massive operational return.

Maturity, Language, and Trust

The EAG helps companies assess maturity. Not by intent count. But by emotional capability.

If your Assistant handles adult-level complexity, it can’t speak like a toddler.

Longer journeys. Higher stakes. More emotion.

As systems grow up, their language must grow with them:

Clear over clever.

Confident over rushed.

Calm over robotic.

Phrases like:

“I can imagine that’s frustrating.”

“Here’s exactly what we’ll do next.”

“You’re in the right place.”

These aren’t scripts. They’re scaffolding.

Because trust isn’t a feature. It’s the foundation.

Language isn’t the final layer. It’s the frame everything hangs on.

You’re not writing lines. You’re building loyalty.

This collaboration between structure and soul doesn’t just happen once — it repeats at every level. From a single sentence to an entire organizational system, each layer of your AI Assistant grows through deeper levels of functionality, trust, behavior, context, and memory. These layers form a kind of nested maturity — a holonic evolution — where builders and poets must keep showing up, again and again. The visual below brings it all together.

A Closing Reflection

If you work in customer experience today, you’re not just launching tools.

You’re shaping emotional infrastructure at scale.

Every day, you help people navigate blocked accounts, canceled flights, confusing terms, missing refunds. You hold their stress, their urgency, their confusion — and help them find ground.

You are not just designing systems. You are designing relief. At scale.

That is sacred work.

And right now, as we lay the foundations of AI cities, we face a decision:

If we leave it only to the builders, we get Dubai — efficient, impressive, but emotionally hollow.

If we leave it only to the poets, we get Venice — beautiful, fragile, and unsustainable.

We need both.

The Empathy Algorithm Grid is one way to bring them together.

If you want to map your Assistant, elevate your capability, or build systems that are as human as they are scalable — we’d love to talk.

Because people don’t just want faster bots.

They want systems that see them.

They want systems with soul.

And if you’re in this work — truly in it — you’re not building automation.

You’re building trust, one sentence at a time.

Join 35,000+ CX and AI leaders and practitioners from brands like Amazon, Comcast, Google, JPMorgan, and T-Mobile who read Power to the Poets.

Each week, you’ll get clear insights and thoughtful frameworks to help you speak confidently about using AI to scale trust, not just transactions.